Introduction

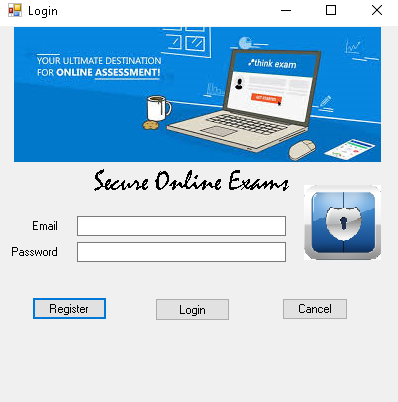

This is an MSc project titled Student Examination System. Its objective is to place students under examination conditions without an invigilator in an examination center: the system itself monitors that the exam is conducted properly. It can be used as an online examination system and covers the following:

- Recognizing the face shape of a particular student

- Detecting whether more than one person is in the examination room

- Analyzing the typing pattern of a student to detect whether someone else is taking the exam

- Recognizing the student's voice and detecting whether more than one person is speaking in the examination room

Setup

Download Emgu CV from http://www.emgu.com/wiki/index.php/Main_Page

Download the Haar cascade files from https://github.com/opencv/opencv/tree/master/data/haarcascades

Create an account at https://www.keytrac.net/

Face recognition

The snippet below illustrates how Emgu CV is loaded when the application starts and how, when the user clicks Capture, the face pattern is processed and saved in the database.

Typing recognition

- Enrollment

During enrollment, a unique user ID is generated, and the student's typing pattern is recorded and associated with that user ID.

- Pattern authentication

While students are taking the exam, their typing pattern is analyzed through the KeyTrac API, which returns a score. A score above 50 indicates that the person typing is the student they claim to be.
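The enrollment and scoring steps described above can be sketched roughly as follows. This is only an illustration in Python, not the project's code: `EnrollmentStore`, `enroll`, and `is_same_typist` are hypothetical names, and the score is passed in directly instead of being fetched from KeyTrac, since the exact API calls are not shown here. The only value taken from the text is the threshold of 50.

```python
import uuid
from dataclasses import dataclass, field

# From the text: a score above 50 is treated as a match.
SCORE_THRESHOLD = 50


@dataclass
class EnrollmentStore:
    """Hypothetical in-memory map from student name to generated user ID."""
    user_ids: dict = field(default_factory=dict)

    def enroll(self, student_name: str) -> str:
        # Generate the unique user ID once and reuse it on later calls.
        if student_name not in self.user_ids:
            self.user_ids[student_name] = str(uuid.uuid4())
        return self.user_ids[student_name]


def is_same_typist(score: float, threshold: float = SCORE_THRESHOLD) -> bool:
    """Return True when the score is strictly above the threshold."""
    return score > threshold
```

In a real setup the user ID returned by `enroll` would be sent along with the recorded typing pattern, and the score checked by `is_same_typist` would come from the service response.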

Testing

- Registering the face pattern

The screenshot below demonstrates how the application detects the face shape of an individual.

Once the user clicks the Capture button, the application processes the user's face shape.

Once the user's face shape has been processed and recognized, the user's name appears every time that individual uses the application.

- Enroll typing pattern

The next step of registration is typing recognition. In short, the user has to type the text appearing above the textbox. The keystrokes and typing pattern are converted into alphanumeric characters.

- Two faces appearing

After the registration process is complete, the user can log into the system to take the exam. If the system detects two faces, the exam is aborted because the exam conditions were not met.
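The abort rule above can be expressed as a small check on the faces detected in each frame. The sketch below is in Python and assumes the detector (for example a Haar cascade) has already returned a list of bounding boxes; `should_abort_exam` and the `(x, y, width, height)` box layout are assumptions made for illustration, not the project's actual code.

```python
from typing import Sequence, Tuple

# Assumed box layout: (x, y, width, height) for each detected face.
Box = Tuple[int, int, int, int]


def should_abort_exam(face_boxes: Sequence[Box]) -> bool:
    """Return True when more than one face is detected in the frame."""
    return len(face_boxes) > 1
```

The text only covers the case of two faces, so a frame with no detected face is not treated as a violation here; whether it should be is a separate design decision.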
